Lemme tell u about this thing that's happening on the Internet

Fun Theory Introduction

People use the term "the Internet" to refer to quite a few rather heterogeneous things these days. Everyone seems to be aware that it is, in some vague capacity, infrastructure. Moreover, people say that social and cultural phenomena and crises happen "on the Internet." It's been a minute since I heard anybody refer to interesting political developments happening "on the highway" or "in the water treatment system" (this was originally written prior to Canadian truckers occupying key highway points and urban centers). No doubt there are important developments in these areas and even political movements that are using these infrastructures as a means of maneuvering. I'm thinking of the farmers' movement in India using the highway for mobilization and disruption, or the Flint, Michigan water crisis as an infrastructure in crisis. While these movements are no doubt defined by the infrastructure that they mobilize on and around, the Internet seems to be a platform of and for many more crises and events than the average piece of infrastructure is.

"The Internet" is characterized as the medium on which most "things" happen these days. From the newest mediocre Netflix show or movie that everyone wants to talk about, to information / cultural / discursive warfare, to the actual infrastructure hiccuping and no longer working, to politicians embarrassing themselves or their constituencies, a wide variety of events happen "on the Internet," usually in the form of crises. These crises may be ones of individuals trying to (dis)identify with a cultural artifact, political crises that are played out through snarky tweets, or military crises where everyone using the Internet becomes a soldier in the networked information war happening in parallel to the kinetic war. It has become apparent that the increased communicative capacity enabled by the Internet, or at least the platforms that define what most people experience as the Internet, often results in an antagonistic, almost tribal impulse by those using it.

My hypothesis is that these multiplying and compounding crises are a function of the disorienting quality of the space that the Internet is constantly creating and re-creating, as well as the uncertainty over how governance can and should be applied to this infrastructure that is both international and supranational. An at the time insightful yet deranged, now only deranged, and distinctly British prophet of our current age gives us an impression of this tendency:

"Deregulation and the state arms-race each other into cyberspace. ... Capital is machinic (non-instrumental) globalization-miniaturization scaling dilation: an automatizing nihilist vortex, neutralizing all values through commensuration to digitized commerce, and driving a migration from despotic command to cyber-sensitive control: from status and meaning to money and information. Its function and formation are indissociable, comprising a teleonomy. Machine-code-capital recycles itself through its axiomatic of consumer control, laundering-out the shit- and blood-stains of primitive accumulation. Each part of the system encourages maximal sumptuous expenditure, whilst the system as a whole requires its inhibition. Schizophrenia. Dissociated consumers destine themselves as worker-bodies to cost control."

Not the most pleasant prose, I admit, but Mr. Land captures the frenetic, libidinous, anxious energy that the Internet has managed to unleash and seems to thrive on. What stands out to me in this passage is the telescoping of perspectives and contradictory conditions that "cyberspace" activates. Planetary scale "globalization" is "miniaturiz[ed]," with each individual part of the global system pushing its Users towards excess in order for the system as a whole to become meta-stable through "inhibition."

Nick Land wrote this piece entitled "Meltdown" in the early 1990s, only a few short years after the Internet became consumer friendly in the form of the World Wide Web. Before becoming the neoreactionary he is today, Land had a William Blake-like sense of things to come, as well as the benefit of not having to exactly elucidate what he meant by what he wrote (not that it is likely that he remembers actually writing it). His impressionistic description of the information revolution and its consequences for the human race only gives us an emotive sense of what will have, and has since, transpired, not a clear road map: a Cold War between state and market that is beneficial for both supposed antagonists; data-producing wage-workers consuming digital goods devoid of value; a final movement from societies of command and discipline to societies of control. Not a pretty place, but one full of useful ideas to work with and against.

A more sober, yet similarly powerful, perspective on the consequences of ubiquitous planetary scale computation and interconnection is Benjamin Bratton's:

"My argument then is not another prophecy of the declining state withering away into the realm of pure network, but to the contrary, that the state's own pressing redefinition takes place in relation to network geographies that it can neither contain nor be contained by. As cloud-based computing platforms of various scales and complexities come to absorb more and more social and economic media, and do so on a planetary scale, the threads linking one data object to one jurisdiction bound to one geographic location become that much more unraveled. It's not that the state cannot follow those threads, rather that when it does it takes leave and becomes something else."

Here, the problematization of mapping geographical space onto what Bratton calls a "cloud-based computing platform," which I take to mean something more eloquent but not that distinct from "cyberspace," causes the political categories of the past to come undone.

The scales that we are accustomed to using are no longer useful for planning and demarcating the future and working with computational entities. I daresay that the political powers of most liberal democracies are only slowly reckoning with their falling behind in terms of understanding the contemporary infrastructural playing field. As Bratton, in my view, correctly points out, many (nation-)states are not even equipped to contend with or regulate these new types of political actors, whose raison d'être is increasingly less conventional and geographical in the sense of the 20th century Ancien Régime. While states are nonetheless still important and relevant political categories, they do not have the same kind of power through demarcation that they used to have. States have trained themselves to see at certain political and social altitudes, using a specific set of well-worn techniques to do so. Although these techniques are powerful in their own right, they have the capacity to blind their users to events outside their field of vision.

A further part of my hypothesis is that this problem of scales and vantage points arises from the insufficiencies and untimeliness of the categories and metaphors that we have at our disposal for making sense of our lived experiences "on the Internet." If you Google (or verb any other search function) "how does the Internet work" you get a few decent results for understanding the Internet as a network of networks that are connected to each other through routers. Furthermore, you will learn that you can address any computer in this network of networks using Internet Protocol (IP) addresses. This explanation is fine if the purpose of your article is to teach people how to program web applications or set up a small home network, which is what most people will do with this information. If your goal, however, is to really get people to understand the scale of the infrastructure that they are using to access the information that they are reading, this explanation is rather unsatisfactory. For one thing, the information about the structure and hierarchy of transport networks is antiquated, but more on that later. One scale and vantage point that, to me, is very obviously lacking is the control plane, i.e., the view of the Internet in which the decisions concerning the actual routing of packets can be observed. Again, more on this shortcoming later.

Before getting into the nitty-grittiness of Internet engineering that I am sure you, dear reader, cannot wait to delve into, I would like to meet on common ground. When we refer to what we as average Users can see happening "on the Internet" we are usually talking about what we experienced "on the Web." We usually access "the Web" through a browser or on "an app" such as Twitter or Facebook. Most of the ways we experience the powers of Internet infrastructure are by using this infrastructure to communicate with other people, or what we think are people, by sending and receiving media files. Note that this communication can be, and very often is, one-sided, i.e., you as a consumer do not explicitly communicate feedback back to the producer or distributor of a communique; most people are lurkers. As such, what is often construed as "the Internet" is in fact just something happening among a select and small group of people in a very specific niche on a single social network. Yes, it's happening "on the Internet," but in this case "the Internet" is just another social space in which social dramas are played out.

Saying that something happened "on the Internet" is not so much wrong as it is a misrepresentation of the agency whereby the event occurred. While platforms, especially networked platforms, thrive by generating "events" and content, these network products are usually the result of a very human experience of human interactions and relations. "The Internet" appears as a space in which these interactions occur. Moreover, these interactions are usually impossible outside of the context of a networked platform. What we are usually recognizing, then, is a very high-level manifestation of what is happening on the infrastructural substrate of the Internet. And I'm here to tell you a story about this infrastructural substrate and what kind of developments are happening deep down "on the Internet" at the control plane.

Fun Nitty Gritty of Internet Infrastructure and the Control Plane

We've established that the Internet is a network of networks, but not all networks are created equal. Each individual network that constitutes the Internet is bounded as a network because it is administered by a single discrete entity. Now, this entity that administers a network may be one of many beasts. It could be

- an Internet Service Provider (ISP), a company that specializes in connecting paying customers to its own network and thus to the wider Internet infrastructure so that these customers can access various services inside and outside the ISP's network;

- an Internet company (Google, Facebook, Amazon, Akamai, Netflix, Alibaba) that provides content or services, sometimes to paying customers but more usually in exchange for customer data;

- a government organisation (think NSA, CIA, DOD, etc.) that has an interest in strictly controlling its internal information flows in the name of security;

- a university or other large institution that wishes to self-administer its Internet presence and define how its Users access its services;

- a transit provider, a type of Internet Service Provider whose main function is to act as an intermediary between other Internet entities and their networks, and which as such is in charge of transmitting large volumes of data from one network to another.

The perspective on the Internet as a network of connected networks is called the control plane. One of the best kept secrets of Internet engineers is the existence of the Border Gateway Protocol (BGP), the protocol that is used to connect two distinct networks and thus enables the transmission of data between them. Even though two networks may be of different sizes, they interact with each other as equals, i.e., they are peers. As such, networks connect to each other by peering with each other. Each network in a peering relationship might configure and run its internal network differently, but when it comes to interacting with each other they have to speak a mutually intelligible language. This common language is codified in the Border Gateway Protocol.

At this point I would like to introduce a key piece of vocabulary that is ubiquitous in the realm of Internet engineering but barely known outside of it: the Autonomous System (AS), the name for a network as an administrative unit used in the context of the Border Gateway Protocol. Simply put, an Autonomous System is a single network administered by a single entity that is the owner or administrator of a set of Internet Protocol (IP) addresses. As you are probably vaguely aware, an Internet Protocol address identifies a single computer and makes it addressable to the rest of the Internet (actually IP addresses are mapped to network interfaces, but let's not get ahead of ourselves). Each AS is assigned a set of IP addresses and each AS is responsible for ensuring the addressability of each computer within its network. If you've been following along using the idea of a network as an administrative unit within the Internet, then just think of an AS as that network, and within each network / AS there exist computers that have IP addresses. I'm asking you to do this slight bit of intellectual labour because I would like to highlight the use of "Autonomous" in "Autonomous System."

"Autonomous" in this sense means that the entity responsible for an AS is really free to configure the AS's internal settings however it wishes to. Each AS is assigned a set number of Internet Protocol (IP) addresses that it is responsible for and that don't change much. These addresses need to be unique. Generally, every device that is connected to an AS has a unique IP address that is assigned to it by the entity running the AS. If more than one device shares the same IP address, then each device may receive the other's traffic along with its own. It is up to the AS administrator to ensure that this does not happen.
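To make the relationship between ASes and IP addresses a bit more tangible, here is a minimal sketch using Python's standard `ipaddress` module. Everything in it is an assumption for illustration: the AS numbers and address blocks are taken from ranges reserved for documentation, the `PREFIXES` table and both function names are hypothetical, and a real lookup would consult routing or registry data rather than a hard-coded dictionary.

```python
import ipaddress

# Hypothetical AS numbers and address blocks, all from ranges reserved for
# documentation (AS64496-64511; 192.0.2.0/24, 198.51.100.0/24, 203.0.113.0/24).
PREFIXES = {
    64496: ["192.0.2.0/24"],
    64497: ["198.51.100.0/24"],
    64498: ["203.0.113.0/25"],
}


def owning_as(address, prefixes=PREFIXES):
    """Return the AS whose most specific block contains the address, or None."""
    ip = ipaddress.ip_address(address)
    best = None  # (prefix length, AS number) of the best match so far
    for asn, blocks in prefixes.items():
        for block in blocks:
            network = ipaddress.ip_network(block)
            if ip in network and (best is None or network.prefixlen > best[0]):
                best = (network.prefixlen, asn)
    return best[1] if best else None


def duplicate_addresses(assignments):
    """Return every address that was handed to more than one device."""
    devices_by_address = {}
    for device, address in assignments.items():
        devices_by_address.setdefault(address, []).append(device)
    return {a: d for a, d in devices_by_address.items() if len(d) > 1}
```

Calling `owning_as("192.0.2.17")` answers the question "which network is responsible for this address?", while `duplicate_addresses` is the kind of check an administrator would want to pass before handing out addresses inside their own AS.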

On a fundamental level the administrator of an AS is responsible for facilitating two things:

- enabling each device that has an IP address within its network (AS) to send messages to any other device with an IP address in any other network (AS)

- enabling each device that has an IP address within its network (AS) to receive messages from any other device with an IP address in any other network (AS)

It is assumed that the steward of an AS wants to perfect the AS's quality of service by offering fast transit through its network, experienced by the end-User as fast loading times for 'content' or low latency. This assumption, however, doesn't mean that they can or will. As I have already outlined, although ASes are the standard administrative unit of the control plane they are not necessarily similar in their function, configuration, or reach.

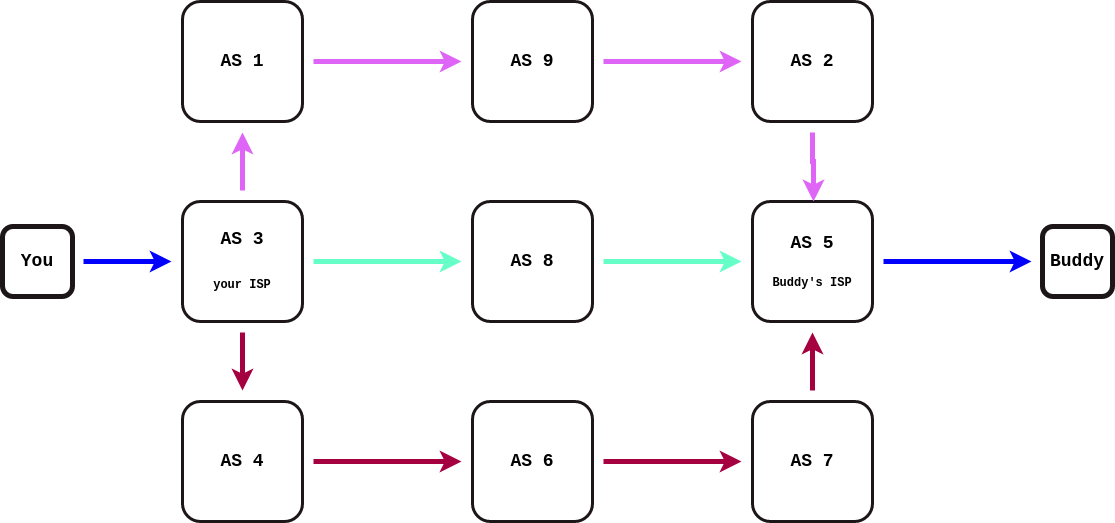

The image above illustrates how you would communicate with a buddy over the Internet, and how ASes are involved in this communication. Each AS has a number and is directly connected with only a few other ASes. For an Internet packet to travel from your computer to your buddy's, it would need to traverse several ASes on its way.
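The traversal described above can be sketched as a search over a graph of ASes. The topology in `LINKS` is made up (the AS numbers come from the range reserved for documentation), and `as_path` is a hypothetical helper that simply looks for the path with the fewest AS hops. Real BGP route selection also weighs each network's business policies, so treat this as a toy model rather than a description of how routers actually decide.

```python
from collections import deque

# Hypothetical AS-level topology: each AS maps to the ASes it is directly
# connected to. You sit in AS 64496, your buddy in AS 64500.
LINKS = {
    64496: [64497],
    64497: [64496, 64498, 64499],
    64498: [64497, 64500],
    64499: [64497, 64500],
    64500: [64498, 64499],
}


def as_path(links, source, destination):
    """Breadth-first search for a path with the fewest AS hops, or None."""
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        if path[-1] == destination:
            return path
        for neighbour in links.get(path[-1], []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append(path + [neighbour])
    return None  # the destination cannot be reached from the source
```

In this made-up topology, `as_path(LINKS, 64496, 64500)` crosses four ASes even though the two endpoints have no direct connection to each other.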

Fun History as an Example

Let's use a brief trip down memory lane to get a sense of what different forms ASes have taken and how they might appear today. If you're an oldish millennial or someone more ancient you might remember that you used to use the Internet mainly to write emails or read static content on web pages that didn't change much. The underlying infrastructure that was supposed to support this low-bandwidth, small file-size form of communication was a hierarchical one. Let's use an email as an example. Your local Internet Service Provider (ISP) Autonomous System (AS) would send your message on to a slightly larger AS, which would send the message on to a transit provider AS. The transit provider would, for example, transport your email across an ocean or large land-mass to an AS that was geographically closer to your recipient. Eventually the email would wander through maybe a few more progressively smaller ASes until it arrived at the AS that was responsible for the Internet Protocol (IP) address that your email was addressed to. That was the ideal model and it worked for all types of Internet messages, such as HTTP or DNS, not just email.

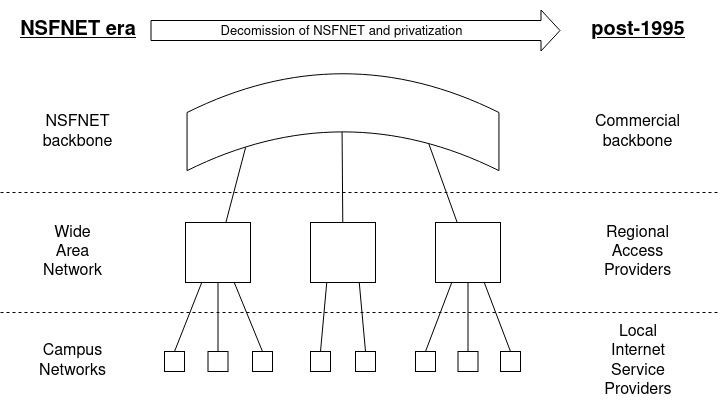

The diagram above illustrates this hierarchical Internet architecture, with the smallest ASes / networks at the bottom and the transit networks that are said to constitute the Internet backbone at the top. Typically, an Internet packet would first travel up the hierarchy and then down. The labels on the left and the right describe this hierarchy in two different eras: the labels on the left describe the scheme while the National Science Foundation Network (NSFNET), a network funded by the US Federal government, was active, up until 1995; the labels on the right describe the Internet during the early years of the commercial Internet after the NSFNET was decommissioned and privatized.
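The "up, then down" movement can be sketched with nothing more than a table of who buys connectivity from whom. The network names in `UPSTREAM` are invented for illustration, and the sketch assumes a strict tree in which every network has exactly one upstream provider, which is a simplification even of the old hierarchical Internet.

```python
# Hypothetical hierarchy: each network names its single upstream provider;
# the backbone at the top has none.
UPSTREAM = {
    "backbone": None,
    "regional-east": "backbone",
    "regional-west": "backbone",
    "isp-a": "regional-east",
    "isp-b": "regional-east",
    "isp-c": "regional-west",
}


def chain_to_top(upstream, network):
    """List the networks from the given one up to the top of the hierarchy."""
    chain = []
    while network is not None:
        chain.append(network)
        network = upstream[network]
    return chain


def hierarchical_route(upstream, source, destination):
    """Climb from the source until reaching a network that also sits above
    the destination, then descend towards the destination."""
    up = chain_to_top(upstream, source)
    down = chain_to_top(upstream, destination)
    for i, network in enumerate(up):
        if network in down:
            j = down.index(network)
            return up[: i + 1] + down[:j][::-1]
    return None  # the two networks share no common provider
```

Two customers of the same regional network only climb one level, while `hierarchical_route(UPSTREAM, "isp-a", "isp-c")` has to go all the way up to the backbone and back down again.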

With the advent of larger files, such as movies or live-streams, this model of sending Internet packets up and then down the hierarchy of ASes becomes less and less feasible. The longer the distances that the fragments of a file have to travel, the slower the whole endeavor becomes, since the networks become congested, the computers administering the control plane have to do more work to keep everything happening at all, and as a consequence infrastructure costs rise. Today you probably use the Internet to watch movies or shorter-form videos, read newspaper articles, browse various content feeds, and buy things. Gone are the days when people were precious with their bits and only sent and received text files. The underlying infrastructure of the Internet has evolved in order to both encourage and accommodate this change in Users' behaviour. This transformation has been possible mainly because the vast majority of people who use the Internet today use the same services and access the same content as most other Users. Instead of millions of people hosting their private websites on some server running in their local nerd's basement, today most people access the Internet through platforms. Despite so much happening "on the Internet," the number of spaces that most things happen in is alarmingly small: "In 2019, more than half of Internet traffic originated from only 5 [HyperGiants], a significant consolidation of traffic since 2009, when it took the largest 150 ASes to contribute half the traffic, and 2007, when it took thousands of ASes" (source). "HyperGiants" is academese for big fuckin' network and this list of five includes

- Netflix

- Akamai

- Amazon

You might not know who or what an Akamai is, but rest assured, they're a major player. In the late 1990s they came up with an idea called a content delivery network (CDN), which is the reason that in the last ten years we have been able to get used to HD video streaming until our eyes bleed. With the old, hierarchical Internet architecture, Internet packets needed to be passed up and then down the hierarchy, traversing several ASes / networks between sender and receiver. The people who founded Akamai and similar content delivery network companies reasoned that if you could get the content into the same AS as that from which Users were making requests, then you could reduce the distance packets had to travel and as a consequence the time needed to transport them. And so Akamai set up their own small servers inside foreign ASes / networks, usually those of smaller Internet service providers lower in the old hierarchy. On these servers Akamai stored the content that their customers, usually content producers or media companies, were paying them to distribute. This arrangement was supposed to benefit everyone involved: the foreign ASes didn't need to spend money and resources to request the content from the original source each time one of their Users wanted to access it because the content was already inside their network; the Users requesting this content got it faster; and Akamai made money by specializing in optimizing routing and data paths across the whole Internet.
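The core idea, keeping a copy of the content inside the User's own AS so that only the first request has to travel to the original source, can be sketched in a few lines. The class names `OriginServer` and `EdgeCache` are hypothetical, and the sketch leaves out everything that makes real content delivery networks hard (expiry, invalidation, limited storage, steering Users to the right server). It is not a description of how Akamai's servers actually work.

```python
class OriginServer:
    """Stands in for the content producer's own, far-away infrastructure."""

    def __init__(self, content):
        self.content = content
        self.requests = 0  # how often someone had to come all the way here

    def fetch(self, path):
        self.requests += 1
        return self.content[path]


class EdgeCache:
    """Stands in for a CDN server placed inside the User's own AS."""

    def __init__(self, origin):
        self.origin = origin
        self.store = {}

    def get(self, path):
        # Only the first request for a path travels to the origin; every
        # later request is answered from the local copy.
        if path not in self.store:
            self.store[path] = self.origin.fetch(path)
        return self.store[path]
```

If a thousand Users in the same AS ask the edge server for the same video, the origin's `requests` counter still reads 1, which is the saving that the foreign AS, the Users, and the content producer all benefit from.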

The only ones left out of this arrangement were transit networks or backbone providers, but other types of content delivery networks took advantage of the transit networks' position at the top of the routing hierarchy. Limelight is the main competitor to Akamai today and used a different strategy to maneuver its way into the business of content delivery networks. Instead of partnering with a large and heterogeneous collection of foreign ASes / networks, Limelight partnered almost exclusively with large transit providers. They then attached a few large data centers with immense computing and data transmission capacities very close to the entry points of these large networks with tremendous throughputs. Limelight's data centers would then pump content directly into the main arteries of the Internet backbone, which would distribute this content down into smaller networks and towards Users requesting it. Similarly to Akamai's, Limelight's customers were media companies that did not want to build and maintain their own infrastructure for digital distribution.

Content delivery networks thus functioned as a neutral distribution and publishing platform for generic content; if you could send it over the Internet, content delivery networks could deliver it for you quicker and cheaper than if you set up and administered your own infrastructure. In the years prior to around 2015, every FAANG (Facebook (Meta), Amazon, Apple, Netflix, Google) company took advantage of the services of either Akamai or Limelight. From the early 2010s onwards, the FAANGs began building their own content delivery networks to increase the speed of delivery for just their content. In many ways, content delivery network technology enabled the rise of the platforms we have today because it was only by using this technology that the Web could appear to run as smoothly as it does today.

Along with the "tech companies" already mentioned, many other media companies outsourced their digital distribution and advertising infrastructure to one of two companies in the early 2000s. These smaller media and publishing companies, therefore, do not need their own ASes / networks to have a public presence on the Web and to distribute their content using the Internet's infrastructure; content delivery networks have thus become the infrastructural stewards for most major non-"tech" companies such as newspapers, TV stations, radio stations, video-hosting platforms, blogs, online shops, advertising companies, you name it. The degree to which content delivery networks are the increasingly monolithic maintainers of the current Internet's infrastructure becomes most apparent when they fail. Fastly, a smaller but nevertheless important content delivery network, went down for a couple of hours in the summer of 2021. CNN, the Guardian, the NY Times, Twitch, Pinterest, Hulu, Reddit, Spotify, and many other media companies used Fastly to deliver their digital content, and as a result the Web presence of these companies was not available for the duration of Fastly's down-time. It is becoming increasingly common for media companies to use several different content delivery networks, thus employing a so-called meta-CDN strategy, so that if one fails the others can pick up the slack and there is no severe disruption of availability.
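The fallback logic behind a meta-CDN strategy can be sketched roughly as follows. All names here (`CDNUnavailable`, `fetch_with_failover`, the two stand-in CDN functions) are hypothetical, and in practice this kind of switching is often done through DNS or load balancers rather than in application code, so read it as an illustration of the idea, not of any company's setup.

```python
class CDNUnavailable(Exception):
    """Raised by a stand-in CDN that cannot serve the request."""


def fetch_with_failover(path, cdns):
    """Try each (name, fetch_function) pair in order and return the first
    successful answer together with the name of the CDN that served it."""
    failures = []
    for name, fetch in cdns:
        try:
            return name, fetch(path)
        except CDNUnavailable as error:
            failures.append(f"{name}: {error}")
    raise CDNUnavailable("all CDNs failed: " + "; ".join(failures))


def broken_cdn(path):
    # Stand-in for a content delivery network that is having an outage.
    raise CDNUnavailable("outage")


def healthy_cdn(path):
    # Stand-in for a content delivery network that is up and running.
    return f"content of {path}"
```

With `cdns = [("cdn-one", broken_cdn), ("cdn-two", healthy_cdn)]`, a request for `/index.html` is quietly served by the second provider, and Users never notice that the first one is down.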

I realize that it might seem like I've gotten slightly side-tracked from where this piece was once headed, but I promise you it's all been relevant for what I'm getting at. The current paradigm of Internet architecture, whereby Web platforms define what this architecture is, is a relatively new one. ASes / networks are still the administrative units that constitute the Internet, and each "tech company" controls one or a handful of ASes. Taken together all ASes, along with their various interconnections, constitute the Internet, but only five ASes are responsible for more than half the content that is distributed through the Internet's infrastructure. If you increase this number to around 20 ASes then you can account for about 80% of all Internet traffic. Long story short, the Internet is becoming increasingly centralized. This centralization is not apparent to most Users because the way they use the Internet is mainly through the Web, and on the Web there still appears to be a heterogeneous offering of content and a diverse ecosystem of businesses and people. However, many of these content providers, businesses, and individuals use one of a handful of companies, such as Akamai, Limelight, or Fastly, to deliver their content for them. The fundamental infrastructure that is responsible for delivering content to Users, therefore, is not as decentralized as it appears, but is tending towards monopoly.

The consequences of this re-centralization of Internet infrastructure are manifold and they are specific to the different entities that use the infrastructure. From the perspective of the "tech companies" that are increasingly the only ones that offer services using the Internet's infrastructure, this development is an enormous boon. Less competition means the playing field is more clearly laid out, which makes it easier to develop business strategies and in the end make money. Companies such as Google and Meta / Facebook might even be eyeing more vertical integration into the infrastructure that they already use. These two companies are major investors in physical infrastructure projects, such as submarine or terrestrial cables, that are dedicated exclusively to transporting their data. The following is speculation on my part, but I wouldn't be surprised if either of these two companies started trying to buy up Internet service providers that are going broke. Doing so would get them even closer to the devices that they send content to in order to a) provide better "quality of service" and b) make it harder or impossible for competitors to reach the devices behind which they control all the serving infrastructure. Users are not just locked into platforms that provide them the content they see; these platforms lock in Users